Virtual Keyboard

In this project we have tried to reduce the gap between the real world and the augmented environment to produce a mixed reality system.

For that purpose, we built a virtual keyboard that is controlled by hand gestures, implemented in Python with the OpenCV library. A web camera supplies the video, which the program reads frame by frame through OpenCV.

With our system, the user controls a virtual keyboard using finger movements and fingertips. The user selects a letter with a fingertip and moves the keyboard with hand gestures.

We implemented the Virtual Keyboard so that a user can communicate with the system through hand gestures, without any additional hardware beyond the webcam already available in the system. The webcam captures consecutive frames, and the program compares them and registers a click when there is a difference in the contour.

Functionalities:

In this project we implement the following functionalities:

- Creating a Frame

- Hand Gesture Detection (finding and segmenting the hand region in the video sequence)

- Virtual Typing

Further keyboard functionalities that we plan to add include Copy, Cut, Paste, Mute, and Unmute.

Application areas:

- Automotive: Lighting System, HUD, Biometric Access, Others

- Consumer Electronics: Smartphone, Laptops & Tablets, Gaming Console, Smart TV, Set-Top Box, Head-Mount Display (HMD), Others

- Healthcare: Sign Language, Lab & Operating Rooms, Diagnosis, Others

- Advertisement & Communication

- Hospitality

- Educational Hubs

Tools and Technologies:

Python, OpenCV, MediaPipe, CVzone, PyCharm

OpenCV:

OpenCV is a widely used, cross-platform, open-source library for computer vision, image processing, and machine learning. It is used to build real-time computer vision applications, and its Python bindings are widely used for image analysis, image processing, detection, and recognition.

MediaPipe:

MediaPipe is a cross-platform library developed by Google that provides ready-to-use ML solutions for computer vision tasks.

CVzone:

CVzone is a computer vision package built on OpenCV and MediaPipe. It makes it easy to run tasks such as hand tracking, face detection, facial landmark detection, and pose estimation, as well as image processing and other computer vision applications.

Steps that we perform to create the project:

Import Libraries for Virtual Keyboard Using OpenCV:

Here we import the HandDetector class from cvzone.HandTrackingModule. To make the virtual keyboard send real keystrokes, we also import Controller from pynput.keyboard.
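
Before running the project it can help to confirm that the required packages are installed. The following is a minimal sketch, not part of the original project: the helper name missing_modules and the list REQUIRED_MODULES are illustrative, and the list assumes the top-level import names cv2, cvzone, mediapipe, and pynput.

```python
from importlib.util import find_spec

# Top-level import names the project relies on (assumed list;
# mediapipe is needed by cvzone's hand tracking module).
REQUIRED_MODULES = ["cv2", "cvzone", "mediapipe", "pynput"]


def missing_modules(names):
    """Return the module names that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_modules(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are available.")
```

Any module reported as missing has to be installed before the imports in the full listing will succeed.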

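Defining the Button Class:

Each key of the virtual keyboard is represented by a Button object that stores its position, its label, and its size.

    keyboard = Controller()


    class Button:
        def __init__(self, first_pos, text, btn_size=(85, 85)):
            self.first_pos = first_pos  # top-left corner (x, y) in pixels
            self.text = text            # character shown on the key and typed by it
            if text == ' ':  # the space bar is wider than the other keys
                self.btn_size = (284, 85)
            else:  # all other buttons use the default size
                self.btn_size = btn_size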
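
The full listing places the buttons on a regular grid: the column index times 100 plus 80 gives x, and the row index times 100 plus 10 gives y. As a rough sketch of that rule in isolation (the function key_positions and its parameter names are made up for illustration and do not appear in the project):

```python
def key_positions(rows, step=100, x_offset=80, y_offset=10):
    """Map each key to the top-left corner of its button.

    Follows the grid rule from the listing: x = step * column + x_offset,
    y = step * row + y_offset.
    """
    positions = []
    for row_index, row in enumerate(rows):
        for col_index, key in enumerate(row):
            corner = (step * col_index + x_offset, step * row_index + y_offset)
            positions.append((key, corner))
    return positions


layout = key_positions([["a", "b", "c"], ["g", "h"]])
for key, corner in layout:
    print(key, corner)
```

With 85-pixel buttons on a 100-pixel grid, neighbouring keys are separated by a 15-pixel gap.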

Main Program for Virtual Keyboard Using OpenCV:

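Here comes the important part: the main loop, which reads frames, tracks the hand, and types the selected key. It relies on cap, detector, buttonList, finalText, and draw_all_buttons(), which are defined in the full listing at the end.

    while True:
        success, img = cap.read()
        if not success:  # stop if the camera returns no frame
            break
        # flip the frame horizontally so that it behaves like a mirror
        img = cv2.flip(img, 1)
        img = detector.findHands(img)  # find the hand
        lmList, bboxInfo = detector.findPosition(img)  # landmarks and bounding box
        draw_all_buttons(img, buttonList)

        if lmList:  # a hand was detected
            for button in buttonList:
                x, y = button.first_pos
                w, h = button.btn_size
                # is the index fingertip (landmark 8) over this button?
                if x < lmList[8][0] < x + w and y < lmList[8][1] < y + h:
                    # highlight the hovered button in gray
                    cv2.rectangle(img, (x, y), (x + w, y + h), (150, 150, 150), cv2.FILLED)
                    cv2.putText(img, button.text, (x + 18, y + 62), cv2.FONT_HERSHEY_PLAIN, 4, (0, 0, 0), 3)
                    # distance between the index (8) and middle (12) fingertips
                    l, _, _ = detector.findDistance(8, 12, img, draw=False)
                    print(l)
                    if l < 30:  # fingertips close together: click this button
                        keyboard.press(button.text)  # type on the real keyboard
                        keyboard.release(button.text)  # release so the key is not held down
                        # show the clicked button in green
                        cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), cv2.FILLED)
                        cv2.putText(img, button.text, (x + 18, y + 62), cv2.FONT_HERSHEY_PLAIN, 4, (0, 0, 0), 3)
                        finalText += button.text
                        sleep(0.30)  # short pause so one gesture types one character

        # show the text typed so far
        cv2.putText(img, finalText, (165, 400), cv2.FONT_HERSHEY_PLAIN, 5, (255, 0, 0), 3)
        cv2.imshow("Virtual Keyboard", img)
        if cv2.waitKey(1) & 0xFF == ord('q'):  # press q to quit
            break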

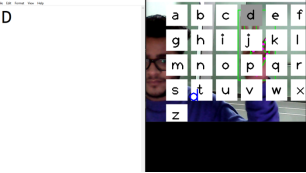

OUTPUT AND RESULTS:

FRAME:

The main work takes place inside the while loop. First we read a real-time input frame and store it in a variable called img. We then pass that image to detector.findHands() to find the hand in the frame, and call detector.findPosition() to get the landmark positions and bounding-box information of the detected hand.

Once we have the landmark positions, we loop through the entire button list. For each button we read its position and size and check whether the tip of the index finger (landmark 8) lies inside it. If it does, the button is redrawn in a darker color to show that it is selected.
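
The check for whether the fingertip is over a button is a point-in-rectangle test. Here is a small sketch of it on its own, using plain tuples instead of the project's Button objects; the function names is_inside and hovered_key are illustrative, not taken from the project.

```python
def is_inside(point, top_left, size):
    """Return True if point lies strictly inside the rectangle."""
    px, py = point
    x, y = top_left
    w, h = size
    return x < px < x + w and y < py < y + h


def hovered_key(fingertip, buttons):
    """Return the text of the first button under the fingertip, or None.

    `buttons` is a list of (text, top_left, size) tuples, a simplified
    stand-in for the Button objects used in this project.
    """
    for text, top_left, size in buttons:
        if is_inside(fingertip, top_left, size):
            return text
    return None


buttons = [("a", (80, 10), (85, 85)), ("b", (180, 10), (85, 85))]
print(hovered_key((200, 50), buttons))  # fingertip over the second key
print(hovered_key((170, 50), buttons))  # fingertip in the gap between keys
```

Because the comparison is strict, a fingertip exactly on a button's border does not count as hovering over it.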

After that, we find the distance between the tips of the index finger and the middle finger. In the image above, you can see that the tips we need are point 8 and point 12, so we pass 8 and 12 to a distance-finding function. In the code above this is detector.findDistance(): we pass 8, 12, and the image, and set the draw flag to False so that no line is drawn between the two points.
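
The quantity being measured is the straight-line distance in pixels between two landmarks. The sketch below computes it directly from a landmark list, assuming each entry starts with the x and y pixel coordinates; the function name landmark_distance and the sample coordinates are made up for illustration.

```python
import math


def landmark_distance(landmarks, first=8, second=12):
    """Euclidean distance in pixels between two landmarks.

    Assumes each landmark entry begins with its x and y coordinates.
    The defaults are the index (8) and middle (12) fingertips.
    """
    x1, y1 = landmarks[first][:2]
    x2, y2 = landmarks[second][:2]
    return math.hypot(x2 - x1, y2 - y1)


# 21 hand landmarks with made-up coordinates
landmarks = [[0, 0] for _ in range(21)]
landmarks[8] = [100, 100]
landmarks[12] = [103, 104]
print(landmark_distance(landmarks))
```

A fixed pixel threshold depends on how far the hand is from the camera, so the value may need tuning for a given setup.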

If the distance between the two points is below a threshold (30 pixels in the code above), we use keyboard.press() to press the key, passing button.text so that the selected character is typed. Finally, we draw the text typed so far onto the frame, so that the pressed keys are displayed.
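
The listing pauses for 0.30 seconds after each key press so that one gesture does not type the same letter many times, but the pause also freezes the video for that time. One possible alternative, sketched here under the assumption that a time-based cooldown is acceptable, is to remember when the last click happened. The class ClickDebouncer is hypothetical and not part of the project.

```python
import time


class ClickDebouncer:
    """Allow a click only if `cooldown` seconds passed since the last one."""

    def __init__(self, cooldown=0.30, clock=time.monotonic):
        self.cooldown = cooldown
        self.clock = clock        # injectable so the class can be tested
        self._last_click = None   # time of the last accepted click

    def try_click(self):
        """Return True and record the time if a click is allowed now."""
        now = self.clock()
        if self._last_click is None or now - self._last_click >= self.cooldown:
            self._last_click = now
            return True
        return False


debouncer = ClickDebouncer(cooldown=0.30)
print(debouncer.try_click())  # first click is always accepted
print(debouncer.try_click())  # an immediate second click is rejected
```

In the main loop, the key press would then run only when try_click() returns True, and the sleep() call could be dropped.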

Once you execute the whole code it looks something like this.

After you bring the index finger and middle finger close to each other on top of a particular letter, you can type that letter.

Entire Code for Virtual Keyboard Using OpenCV:

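Below is the entire code. It uses the older cvzone HandDetector interface, in which findHands() returns the image, findPosition() returns the landmark list, and findDistance() takes landmark indices. Later cvzone releases changed these methods, so the listing may need adapting for them.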

    import cv2
    import cvzone
    from cvzone.HandTrackingModule import HandDetector
    from time import sleep
    from pynput.keyboard import Controller

    cap = cv2.VideoCapture(0)
    cap.set(3, 1280)  # frame width
    cap.set(4, 720)   # frame height

    keyboard = Controller()
    detector = HandDetector(detectionCon=0.8)
    finalText = ""

    # each inner list is one row of keys; the space bar gets its own row
    keys = [["a", "b", "c", "d", "e", "f"],
            ["g", "h", "i", "j", "k", "l"],
            ["m", "n", "o", "p", "q", "r"],
            ["s", "t", "u", "v", "w", "x", "y"],
            ["z"],
            [" "]]

    # all keys
    # keys = [["q", "w", "e", "r", "t", "y", "u", "i", "o", "p"],
    #         ["a", "s", "d", "f", "g", "h", "j", "k", "l"],
    #         ["z", "x", "c", "v", "b", "n", "m", " "],
    #         ["1", "3"]]


    class Button:
        def __init__(self, first_pos, text, btn_size=(85, 85)):
            self.first_pos = first_pos  # top-left corner (x, y) in pixels
            self.text = text
            if text == ' ':  # the space bar is wider than the other keys
                self.btn_size = (284, 85)
            else:  # all other buttons use the default size
                self.btn_size = btn_size


    def draw_all_buttons(img, buttonList):
        for button in buttonList:
            x, y = button.first_pos
            w, h = button.btn_size
            # draw the key: corner decoration, white background, black label
            cvzone.cornerRect(img, (x, y, w, h), 20, rt=0)
            cv2.rectangle(img, (x, y), (x + w, y + h), (255, 255, 255), cv2.FILLED)
            cv2.putText(img, button.text, (x + 18, y + 62), cv2.FONT_HERSHEY_PLAIN, 4, (0, 0, 0), 3)
        return img


    # place the buttons on a grid: i is the row index, x the column index
    buttonList = []
    for i in range(len(keys)):
        for x, key in enumerate(keys[i]):
            buttonList.append(Button((100 * x + 80, 100 * i + 10), key))

    while True:
        success, img = cap.read()
        if not success:  # stop if the camera returns no frame
            break
        # flip the frame horizontally so that it behaves like a mirror
        img = cv2.flip(img, 1)
        img = detector.findHands(img)  # find the hand
        lmList, bboxInfo = detector.findPosition(img)  # landmarks and bounding box
        draw_all_buttons(img, buttonList)

        if lmList:  # a hand was detected
            for button in buttonList:
                x, y = button.first_pos
                w, h = button.btn_size
                # is the index fingertip (landmark 8) over this button?
                if x < lmList[8][0] < x + w and y < lmList[8][1] < y + h:
                    # highlight the hovered button in gray
                    cv2.rectangle(img, (x, y), (x + w, y + h), (150, 150, 150), cv2.FILLED)
                    cv2.putText(img, button.text, (x + 18, y + 62), cv2.FONT_HERSHEY_PLAIN, 4, (0, 0, 0), 3)
                    # distance between the index (8) and middle (12) fingertips
                    l, _, _ = detector.findDistance(8, 12, img, draw=False)
                    print(l)
                    if l < 30:  # fingertips close together: click this button
                        keyboard.press(button.text)  # type on the real keyboard
                        keyboard.release(button.text)  # release so the key is not held down
                        # show the clicked button in green
                        cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), cv2.FILLED)
                        cv2.putText(img, button.text, (x + 18, y + 62), cv2.FONT_HERSHEY_PLAIN, 4, (0, 0, 0), 3)
                        finalText += button.text
                        sleep(0.30)  # short pause so one gesture types one character

        # show the text typed so far
        cv2.putText(img, finalText, (165, 400), cv2.FONT_HERSHEY_PLAIN, 5, (255, 0, 0), 3)
        cv2.imshow("Virtual Keyboard", img)
        if cv2.waitKey(1) & 0xFF == ord('q'):  # press q to quit
            break

    # free the camera and close the window
    cap.release()
    cv2.destroyAllWindows()

THANK YOU
